Anandtech says it loud and clear: developers who have gotten their hands on the PS3 and Xbox 360 SDKs find that the CPUs in these consoles fall far short of their expectations. Anandtech was promptly censored and pulled the article… In short, it explained that the real-world performance of the Xbox 360’s CPU (Xenon) is only about twice that of the original Xbox’s CPU, which ran at just 733MHz. This comes down to the chip’s small size (about half a 90nm Prescott die) and to its architecture.

As for multithreading, Anandtech, citing Microsoft, says we will have to wait another four or five years to see software that really takes advantage of it. At first, games will run one or two threads at once, while the Xbox 360 is designed to handle four or six. As for all the buzz about these consoles’ 1-2 TFLOPS, Anandtech explains why it is 100% marketing bullshit. So why did Sony and Microsoft make these choices? Here Anandtech is much less convincing: according to them, Sony doesn’t care what developers want because its hardware dominates the industry, and Microsoft’s choice was driven by Xenon’s low cost.

But it’s not all doom and gloom: the GPUs in these consoles are very powerful and should make gorgeous games possible. Still, the article ends on a pessimistic note: these new consoles are designed to deliver beautiful graphics but neglect the areas that could bring real innovation, namely AI and physics engines, which usually lie at the heart of gameplay. The funniest part (if that’s the right word) is that Chris Hecker said exactly the same thing at E3, to total indifference: remember?

Meanwhile, a guy at Ars Technica thinks this is all nonsense and that developers complain about multi-core CPUs because they are too lazy to learn how to use them. Simplistic reasoning, but not necessarily wrong.

Since you’ve all been good, here is the Anandtech article that was censored:

Microsoft’s Xbox 360 & Sony’s PlayStation 3 – Examples of Poor CPU Performance

Learning from Generation X

The original Xbox console marked a very important step in the evolution of gaming consoles – it was the first console that was little more than a Windows PC.

It featured a 733MHz Pentium III processor with a 128KB L2 cache, paired up with a modified version of NVIDIA’s nForce chipset (modified to support Intel’s Pentium III bus instead of the Athlon XP it was designed for). The nForce chipset featured an integrated GPU, codenamed the NV2A, offering performance very similar to that of a GeForce3. The system had a 5X PC DVD drive and an 8GB IDE hard drive, and all of the controllers interfaced to the console using USB cables with a proprietary connector.

For the most part, game developers were quite pleased with the original Xbox. It offered them a much more powerful CPU, GPU and overall platform than any console before it. But as time went on, developers definitely ran into limitations with the first Xbox.

One of the biggest limitations ended up being the meager 64MB of memory that the system shipped with. Developers had asked for 128MB and the motherboard even had positions silk screened for an additional 64MB, but in an attempt to control costs the final console only shipped with 64MB of memory.

The next problem is that the NV2A GPU ended up lacking the fill rate and memory bandwidth necessary to drive high resolutions, which kept the Xbox from being used as an HD console.

Intel outfitted the original Xbox with a Pentium III/Celeron hybrid to improve performance while keeping costs low, but at 733MHz the CPU quickly became a performance bottleneck for the more complex games that followed the console’s introduction.

The combination of GPU and CPU limitations made 30 fps the frame rate target for many games, while simpler titles were able to run at 60 fps. Split-screen play in Halo would even stutter below 30 fps depending on what was happening on screen, and that was just a first-generation title. More experience with the Xbox brought creative solutions to the limitations of the console, but clearly most game developers had a wish list of things they would have liked to see in the Xbox successor. Similar complaints were levied against the PlayStation 2, and in some cases they were more extreme (e.g. its 4MB frame buffer).
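To make those frame rate targets concrete, the short Python sketch below converts a target frame rate into a per-frame time budget and into the number of CPU clock cycles a 733MHz processor has per frame. It is only an illustration and assumes, unrealistically, that every clock cycle is available to the game (no OS overhead, no stalls, no waiting on the GPU).

```python
def frame_budget_ms(fps: float) -> float:
    """Time available to produce one frame, in milliseconds."""
    return 1000.0 / fps


def cycles_per_frame(clock_hz: float, fps: float) -> float:
    """CPU clock cycles available per frame at a given frame rate.

    Assumes the whole clock goes to the game, which is an idealization.
    """
    return clock_hz / fps


XBOX_CPU_HZ = 733e6  # the original Xbox's 733MHz CPU

for fps in (30, 60):
    print(f"{fps} fps: {frame_budget_ms(fps):.1f} ms per frame, "
          f"{cycles_per_frame(XBOX_CPU_HZ, fps) / 1e6:.1f} M cycles at 733MHz")
```

Dropping from 60 to 30 fps doubles the per-frame budget, which is why a CPU-bound game falls back to 30 fps rather than to some value in between.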

Given that consoles are generally evolutionary, taking lessons learned in previous generations and delivering what game developers want in order to create the next generation of titles, it isn’t a surprise to see that a number of these problems are fixed in the Xbox 360 and PlayStation 3.

One of the most important changes with the new consoles is that system memory has been bumped from 64MB on the original Xbox to a whopping 512MB on both the Xbox 360 and the PlayStation 3. For the Xbox, that’s a factor of 8 increase, and over 12x the total memory present on the PlayStation 2.

The other important improvement in the next generation of consoles is that the GPUs have been improved tremendously. With 6 – 12 month product cycles, it’s no surprise that GPUs have become much more powerful in the past 4 years. By far the biggest upgrade these new consoles will offer, from a graphics standpoint, is the ability to support HD resolutions.

There are obviously other, less performance-oriented improvements such as wireless controllers and more ubiquitous multi-channel sound support. And with Sony’s PlayStation 3, disc capacity goes up thanks to its adoption of the Blu-ray standard.

But then we come to the issue of the CPUs in these next-generation consoles, and the level of improvement they offer. Both the Xbox 360 and the PlayStation 3 use multi-core CPUs that are supposed to usher in a new era of improved game physics and realism. Unfortunately, as we have found out, bringing multi-core CPUs to these consoles came at a very steep cost in performance.

Problems with the Architecture

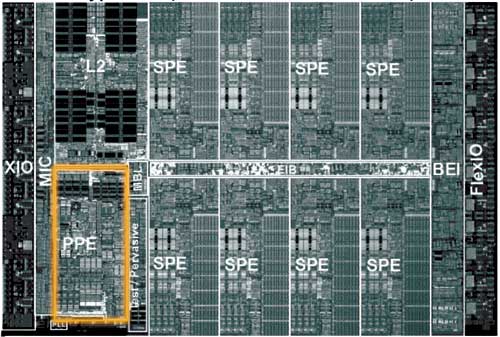

At the heart of both the Xenon and Cell processors is IBM’s custom PowerPC-based core. We’ve discussed this core in our previous articles, but it is best characterized as quite simple. The core itself is a very narrow 2-issue in-order execution core, featuring a 64KB L1 cache (32KB instruction/32KB data) and either a 1MB or 512KB L2 cache (for Xenon or Cell, respectively; Xenon’s 1MB L2 is shared by its three cores). Supporting SMT, the core can execute two threads simultaneously, much like a Pentium 4 with Hyper-Threading enabled. The Xenon CPU is made up of three of these cores, while Cell features just one.

Each individual core is extremely small, making the 3-core Xenon CPU in the Xbox 360 smaller than a single core 90nm Pentium 4. While we don’t have exact die sizes, we’ve heard that the number is around 1/2 the size of the 90nm Prescott die.

IBM’s pitch to Microsoft was based on the peak theoretical floating point performance-per-dollar that the Xenon CPU would offer, and given Microsoft’s focus on cost savings with the Xbox 360, they took the bait.

While Microsoft and Sony have been childishly waging this FLOPS war, comparing the Xbox 360’s claimed 1 TFLOPS of processing power to the PlayStation 3’s claimed 2 TFLOPS, the real-world performance war has already been lost.

Right now, from what we’ve heard, the real-world performance of the Xenon CPU is about twice that of the 733MHz processor in the first Xbox. Considering that this CPU is supposed to power the Xbox 360 for the next 4 – 5 years, it’s nothing short of disappointing. To put it in perspective, floating point multiplies are apparently 1/3 as fast on Xenon as on a Pentium 4.

The reason for the poor performance? The very narrow 2-issue in-order core also happens to be very deeply pipelined, apparently with a branch predictor that’s not the best in the business. In the end, you get what you pay for, and with such a small core, it’s no surprise that performance isn’t anywhere near the Athlon 64 or Pentium 4 class.
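One standard way to see why a deep pipeline with a mediocre branch predictor hurts is the textbook effective-CPI (cycles per instruction) model: every mispredicted branch flushes the pipeline, and the flush costs roughly as many cycles as the pipeline is deep. The Python sketch below applies that model; the branch frequency, misprediction rates and penalties are hypothetical values chosen for illustration, not measurements of Xenon or any other chip.

```python
def effective_cpi(base_cpi: float, branch_fraction: float,
                  mispredict_rate: float, penalty_cycles: float) -> float:
    """Average cycles per instruction once branch mispredictions are included.

    base_cpi        -- CPI with perfect branch prediction
    branch_fraction -- fraction of instructions that are branches
    mispredict_rate -- fraction of branches the predictor gets wrong
    penalty_cycles  -- cycles lost per misprediction (grows with pipeline depth)
    """
    return base_cpi + branch_fraction * mispredict_rate * penalty_cycles


# Hypothetical workload: 20% of instructions are branches, and the 2-issue
# core reaches its ideal CPI of 0.5 only if both issue slots are always filled.
shallow = effective_cpi(0.5, 0.20, 0.05, 10)  # good predictor, short pipeline
deep = effective_cpi(0.5, 0.20, 0.10, 24)     # weaker predictor, deep pipeline

print(f"shallow: CPI {shallow:.2f}, deep: CPI {deep:.2f}, "
      f"slowdown {deep / shallow:.2f}x at the same clock")
```

Under these assumed numbers, the deep pipeline needs a clock speed roughly 1.6 times higher just to break even, which is the trade-off a narrow, deeply pipelined core is making.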

The Cell processor doesn’t get off the hook just because it only uses a single one of these horribly slow cores; the SPE array ends up being fairly useless in the majority of situations, making it little more than a waste of die space.

We mentioned before that collision detection can be accelerated on Cell’s SPEs, despite being fairly branch-heavy. The lack of a branch predictor in the SPEs apparently isn’t that big of a deal, since most collision detection branches are basically random and can’t be predicted even by the best branch predictor. So the missing branch predictor doesn’t hurt; what does hurt is the very small amount of local memory available to each SPE. To access main memory, the SPE places a DMA request on the bus (or the PPE can initiate the DMA request) and waits for it to be fulfilled. According to those who have worked with the PS3 development kits, this access takes far too long to be used in many real-world scenarios. It is the small amount of local memory available to each SPE that limits the SPEs to a handful of tasks. While physics acceleration is an important one, there are many more tasks that can’t be accelerated by the SPEs because of the memory limitation.
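A rough cost model shows why the local store size matters so much. Each SPE has a 256KB local store that must hold both code and data; anything else has to be fetched from main memory by DMA. In the Python sketch below, the 64KB code size, the 500-cycle DMA latency and the workload sizes are hypothetical values, and only latency is counted (transfer bandwidth, and the overlap that double buffering can buy, are ignored). It contrasts a task that streams contiguous data through the local store with one that makes scattered lookups into a structure too large to fit.

```python
import math

LOCAL_STORE_BYTES = 256 * 1024  # per-SPE local store, shared by code and data
DMA_LATENCY = 500               # hypothetical DMA round trip, in SPE cycles


def dma_transfers_streaming(data_bytes: int, code_bytes: int, buffers: int = 2) -> int:
    """DMA requests needed to stream `data_bytes` through the local store.

    The space left after the code is split into `buffers` equal chunks
    (2 = double buffering: compute on one chunk while the next arrives).
    """
    chunk = (LOCAL_STORE_BYTES - code_bytes) // buffers
    if chunk <= 0:
        raise ValueError("code leaves no room for data")
    return math.ceil(data_bytes / chunk)


def latency_cycles(dma_requests: int, dma_latency_cycles: int) -> int:
    """Cycles spent waiting on DMA latency alone (bandwidth ignored)."""
    return dma_requests * dma_latency_cycles


# Streaming 4MB of contiguous data: a few dozen large transfers.
streaming = latency_cycles(dma_transfers_streaming(4 * 1024 * 1024, 64 * 1024), DMA_LATENCY)

# 100,000 scattered lookups into a structure that doesn't fit in local store:
# every lookup turns into its own DMA round trip.
scattered = latency_cycles(100_000, DMA_LATENCY)

print(streaming, scattered)  # 21500 50000000
```

Tasks that fit the first pattern suit the SPEs; tasks that look like the second spend most of their time waiting, which matches the developers’ complaint.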

The other point that has been made is that even if you can offload some of the physics calculations to the SPE array, the Cell’s PPE ends up being a pretty big bottleneck thanks to its overall lackluster performance. It’s akin to having an extremely fast GPU but without a fast CPU to pair it up with.

What About Multithreading?

We of course asked the obvious question: would game developers rather have 3 slow general-purpose cores, or one of those cores paired with an array of specialized SPEs? The response was unanimous: everyone we have spoken to would rather take the general-purpose core approach.

Citing everything from ease of programming to the SPE limitations we mentioned previously, the cross-platform developers we’ve spoken to consider the Xbox 360 the more developer-friendly of the two platforms. Despite being more developer-friendly, the Xenon CPU is still not what developers wanted.

The most ironic bit of it all is that according to developers, if either manufacturer had decided to use an Athlon 64 or a Pentium D in their next-gen console, they would be significantly ahead of the competition in terms of CPU performance.

While the developers we’ve spoken to agree that heavily multithreaded game engines are the future, that future won’t really take form for another 3 – 5 years. Even Microsoft admitted to us that all developers are focusing on having, at most, one or two threads of execution for the game engine itself – not the four or six threads that the Xbox 360 was designed for.

Even when games become more aggressive with their multithreading, targeting 2 – 4 threads, most of the work will still be done in a single thread. Only with the next step in multithreaded architectures will that single thread be broken down further, and by then we’ll be talking about the Xbox 720 and PlayStation 4. In the end, the more multithreaded nature of these new console CPUs doesn’t paint a much brighter performance picture: multithreaded or not, game developers are not pleased with the performance of these CPUs.
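The argument that extra threads help little while most of the work stays in one thread is Amdahl’s law, which the article doesn’t name but which quantifies it. The Python sketch below uses a hypothetical engine in which 70% of each frame’s work remains on the main game thread; that split is an assumption for illustration, not a figure from the developers quoted here.

```python
def amdahl_speedup(parallel_fraction: float, threads: int) -> float:
    """Amdahl's law: overall speedup when only `parallel_fraction` of the
    work can be spread across `threads` hardware threads."""
    if not 0.0 <= parallel_fraction <= 1.0 or threads < 1:
        raise ValueError("invalid arguments")
    serial_fraction = 1.0 - parallel_fraction
    return 1.0 / (serial_fraction + parallel_fraction / threads)


# Hypothetical engine: 70% of each frame stays on the main game thread.
for threads in (1, 2, 4, 6):
    print(threads, "threads ->", round(amdahl_speedup(0.30, threads), 2), "x")
```

With this split, even six hardware threads can never beat 1/0.7, or about 1.43 times the speed of a single thread on the same core, so the single-thread performance of each core still sets the ceiling.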

What about all those Flops?

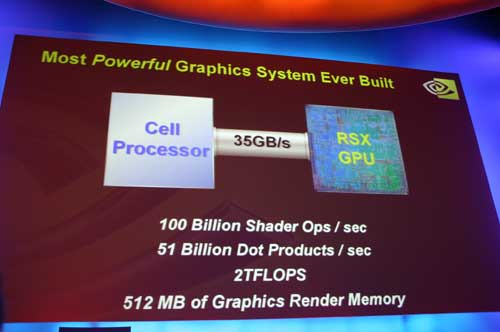

The one statement that we heard over and over again was that Microsoft was sold on the peak theoretical performance of the Xenon CPU. Ever since the announcement of the Xbox 360 and PS3 hardware, people have been set on comparing Microsoft’s figure of 1 trillion floating point operations per second (1 TFLOPS) to Sony’s figure of 2 TFLOPS. Any AnandTech reader should know for a fact that these numbers are meaningless, but just in case you need some reasoning for why, let’s look at the facts.

First and foremost, a floating point operation can be anything: it can be adding two floating point numbers together, performing a dot product on two vectors of floating point numbers, or even just calculating the complement of a floating point number. Anything that is executed on an FPU is fair game to be called a floating point operation.
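The Python sketch below illustrates the counting problem with a 4-component dot product. The arithmetic is always the same, but the number of "operations" reported depends on whether you count individual multiplies and adds, instructions on hardware with a fused multiply-add, or a single vector dot-product instruction. These are generic counting conventions used for illustration, not the specific ones Microsoft or Sony used.

```python
def dot4(a, b):
    """Plain 4-component dot product: a0*b0 + a1*b1 + a2*b2 + a3*b3."""
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2] + a[3] * b[3]


# The same arithmetic, "counted" three different ways:
ops_as_scalar_mul_and_add = 4 + 3      # 4 multiplies + 3 additions
ops_as_instructions_with_fma = 1 + 3   # 1 multiply, then 3 fused multiply-adds
ops_as_one_vector_instruction = 1      # a single dot-product instruction

print(dot4((1.0, 2.0, 3.0, 4.0), (5.0, 6.0, 7.0, 8.0)))  # 70.0
print(ops_as_scalar_mul_and_add, ops_as_instructions_with_fma,
      ops_as_one_vector_instruction)                      # 7 4 1
```

One result, three different operation counts: a FLOPS figure means little until you know which convention produced it.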

Secondly, both floating point power numbers refer to the whole system, CPU and GPU. Obviously a GPU’s floating point processing power doesn’t mean anything if you’re trying to run general purpose code on it and vice versa. As we’ve seen from the graphics market, characterizing GPU performance in terms of generic floating point operations per second is far from the full performance story.

Third, when a manufacturer talks about peak floating point performance, there are a few things it isn’t taking into account. Being able to process billions of operations per second depends on actually having that many floating point operations to work on. That means you need enough bandwidth to keep the FPUs fed, no mispredicted branches, no cache misses, and code structured so that all of the FPUs are fed at all times and can execute at their peak rates. We already know that’s not the case, as game developers have told us that the Xenon CPU isn’t even in the same realm of performance as the Pentium 4 or Athlon 64. Moreover, the requirements for hitting peak theoretical performance are always unrealistic: caches are only so big, so eventually a request to main memory will be needed, and you can expect that request to take a few hundred clock cycles to be fulfilled, during which no floating point operations will happen at all.
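A simplified model puts a number on the cache-miss point. Suppose a core computes for a stretch of instructions, then takes a cache miss and stalls for the full memory latency, with nothing overlapping the stall (roughly the situation of an in-order core). The Python sketch below computes the fraction of cycles spent doing useful work; the 100 GFLOPS peak, one miss per 1,000 instructions and 300-cycle penalty are all hypothetical values.

```python
def sustained_fraction(instrs_between_misses: float, cycles_per_instr: float,
                       miss_penalty_cycles: float) -> float:
    """Fraction of cycles in which the core does useful work.

    Simplified model: the core runs `instrs_between_misses` instructions,
    then stalls for the whole miss penalty; nothing overlaps the stall.
    """
    busy = instrs_between_misses * cycles_per_instr
    return busy / (busy + miss_penalty_cycles)


PEAK_GFLOPS = 100.0  # hypothetical peak figure

frac = sustained_fraction(1000, 1.0, 300)
print(f"{frac:.0%} of peak -> {PEAK_GFLOPS * frac:.1f} GFLOPS")
```

Even this optimistic model, which still assumes every busy cycle runs at the full peak rate, loses almost a quarter of the headline number to one miss per thousand instructions, before branch mispredictions or dependency stalls are considered.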

So while there may be some extreme cases where the Xenon CPU can hit its peak performance, it sure isn’t happening in any real world code.

The Cell processor is no different; given that its PPE is identical to one of the PowerPC cores in Xenon, it must derive its floating point performance advantage from its array of SPEs. So what’s the issue with the 218 GFLOPS figure (2 TFLOPS for the whole system)? Well, from what we’ve heard, game developers are finding that they can’t use the SPEs for a lot of tasks. So in the end, it doesn’t matter what the peak theoretical performance of Cell’s SPE array is if those SPEs aren’t being used all the time.
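The underlying arithmetic is simple: delivered throughput is the peak rate multiplied by the fraction of time the units are actually doing useful work. The Python sketch below compares two made-up chips; the peak and utilization numbers are hypothetical and are not estimates for Cell, Xenon or any real processor.

```python
def effective_throughput(peak_gflops: float, utilization: float) -> float:
    """Delivered throughput = peak rate x fraction of time the units do useful work."""
    if not 0.0 <= utilization <= 1.0:
        raise ValueError("utilization must be between 0 and 1")
    return peak_gflops * utilization


# Hypothetical chips, not real measurements:
wide_but_idle = effective_throughput(200.0, 0.15)   # big peak, rarely fed
narrow_but_busy = effective_throughput(60.0, 0.70)  # small peak, kept busy

print(f"{wide_but_idle:.1f} vs {narrow_but_busy:.1f} GFLOPS delivered")
```

The chip with less than a third of the peak rating delivers more useful work, because its units are kept busy.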

Another way to look at this comparison of flops is to look at integer add throughput on the Pentium 4 vs. the Athlon 64. The Pentium 4 has two double-pumped ALUs, each capable of performing two add operations per clock, for a total of 4 add operations per clock; so we could say that a 3.8GHz Pentium 4 can perform 15.2 billion operations per second. The Athlon 64 has three ALUs, each capable of executing an add every clock; so a 2.8GHz Athlon 64 can perform 8.4 billion operations per second. By this silly console marketing logic, the Pentium 4 would be almost twice as fast as the Athlon 64, and a multi-core Pentium 4 would be faster than a multi-core Athlon 64. Any AnandTech reader should know that’s hardly the case. No code is composed entirely of add instructions, and even if it were, eventually the Pentium 4 and Athlon 64 will have to go out to main memory for data, and when they do, the Athlon 64 has much lower-latency access to memory than the P4. In the end, despite what these horribly concocted numbers may lead you to believe, they say absolutely nothing about performance. The exact same situation exists with the CPUs of the next-generation consoles; don’t fall for it.
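The peak add-rate arithmetic above can be reproduced in a few lines of Python. The sketch only multiplies clock speed by ALU count and adds per ALU per clock, exactly as the marketing-style comparison does, so it is meant to show how the "almost twice as fast" figure arises, not to measure anything.

```python
def peak_adds_per_second(clock_mhz: int, alus: int, adds_per_alu_per_clock: int) -> int:
    """Peak integer adds per second, assuming every ALU issues an add every clock."""
    return clock_mhz * 1_000_000 * alus * adds_per_alu_per_clock


p4 = peak_adds_per_second(3800, alus=2, adds_per_alu_per_clock=2)   # two double-pumped ALUs
a64 = peak_adds_per_second(2800, alus=3, adds_per_alu_per_clock=1)  # three ALUs

print(f"P4: {p4 / 1e9:.1f}G, Athlon 64: {a64 / 1e9:.1f}G, ratio {p4 / a64:.2f}")
# P4: 15.2G, Athlon 64: 8.4G, ratio 1.81
```

Nothing in this calculation knows about instruction mix, branches or memory latency, which is precisely why it says nothing about real performance.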

Why did Sony/MS do it?

For Sony, it doesn’t take much to see that the Cell processor is eerily similar to the Emotion Engine in the PlayStation 2, at least conceptually. Sony clearly has an idea of what direction they would like to go in, and it doesn’t happen to be one that’s aligned with much of the rest of the industry. Sony’s past successes have really come, not because of the hardware, but because of the developers and their PSX/PS2 exclusive titles. A single hot title can ship millions of consoles, and by our count, Sony has had many more of those than Microsoft had with the first Xbox.

Sony shipped around 4 times as many PlayStation 2 consoles as Microsoft did Xboxes, and regardless of the hardware platform, a game developer won’t turn down working with the PS2: the install base is just that attractive. So for Sony, the Cell processor may be strange and even undesirable for game developers, but the developers will come regardless.

The real surprise was Microsoft; with the first Xbox, Microsoft listened very closely to the wants and desires of game developers. This time around, despite what has been said publicly, the Xbox 360’s CPU architecture wasn’t what game developers had asked for.

They wanted a multi-core CPU, but not such a significant step back in single threaded performance. When AMD and Intel moved to multi-core designs, they did so at the expense of a few hundred MHz in clock speed, not by taking a step back in architecture.

We suspect that a big part of Microsoft’s decision to go with the Xenon core was because of its extremely small size. A smaller die means lower system costs, and if Microsoft indeed launches the Xbox 360 at $299 the Xenon CPU will be a big reason why that was made possible.

Another contributing factor may be the fact that Microsoft wanted to own the IP of the silicon that went into the Xbox 360. We seriously doubt that either AMD or Intel would be willing to grant them the right to make Pentium 4 or Athlon 64 CPUs, so it may have been that IBM was the only partner willing to work on Microsoft’s terms, and only with this one specific core.

Regardless of the reasoning, not a single developer we’ve spoken to thinks that it was the right decision.

The Saving Grace: The GPUs

Although both manufacturers royally screwed up their CPUs, all developers have agreed that they are quite pleased with the GPU power of the next-generation consoles.

First, let’s talk about NVIDIA’s RSX in the PlayStation 3. We discussed the possibility of RSX offloading vertex processing onto the Cell processor, but more and more it seems that isn’t the case. It looks like the RSX will basically be a 90nm G70 with Turbo Cache running at 550MHz, and the performance will be quite good.

One option we didn’t discuss in the last article was that the G70 GPU may already feature a number of disabled shader pipes to improve yield. The move to 90nm may allow those pipes to be enabled, which would be another scenario in which the RSX offers higher performance at the same transistor count as the present-day G70. Sony may be hesitant to reveal the actual number of pixel and vertex pipes in the RSX because, honestly, it won’t know what its final yields will be until a few months before mass production.
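Why disabling pipes helps yield can be shown with a standard binomial model: if each pipe independently comes out defect-free with some probability, a chip that needs only some of its pipes to work is far more likely to be usable than one that needs all of them. In the Python sketch below, the 98% per-pipe probability and the 24-pipe design needing 20 working pipes are hypothetical numbers, not NVIDIA or Sony data, and real defects are not perfectly independent.

```python
from math import comb


def yield_at_least(k_needed: int, n_built: int, p_unit_good: float) -> float:
    """Probability that at least `k_needed` of `n_built` identical units are
    defect-free, assuming independent defects (binomial model)."""
    return sum(
        comb(n_built, i) * p_unit_good**i * (1 - p_unit_good) ** (n_built - i)
        for i in range(k_needed, n_built + 1)
    )


P_PIPE_GOOD = 0.98  # hypothetical per-pipe probability of being defect-free

print(f"all 24 of 24 pipes must work: {yield_at_least(24, 24, P_PIPE_GOOD):.1%}")
print(f"only 20 of 24 pipes must work: {yield_at_least(20, 24, P_PIPE_GOOD):.1%}")
```

As a process matures and the per-pipe probability rises, the all-pipes-enabled yield climbs quickly, which is the scenario in which the spare pipes could be switched on.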

Despite strong performance and support for 1080p, a large number of developers are targeting 720p for their PS3 titles and won’t support 1080p. Those that are simply porting current-generation games over will have no problems running at 1080p, but anyone working on a truly next-generation title won’t have the fill rate necessary to render at 1080p.
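The fill-rate argument comes down to pixel counts: a 1080p frame has 2.25 times as many pixels as a 720p frame, so every per-pixel cost (shading, blending, framebuffer writes) grows by that factor. The Python sketch below computes the ratio, plus a pixels-per-second requirement in which the overdraw factor is a hypothetical parameter rather than a figure for any real game.

```python
def pixels(width: int, height: int) -> int:
    """Number of pixels in one frame."""
    return width * height


def pixel_fill_per_second(width: int, height: int, fps: int, overdraw: int) -> int:
    """Pixels that must be drawn per second; `overdraw` is how many times,
    on average, each screen pixel is drawn per frame (hypothetical)."""
    return pixels(width, height) * fps * overdraw


p720 = pixels(1280, 720)
p1080 = pixels(1920, 1080)
print(p720, p1080, p1080 / p720)  # 921600 2073600 2.25
```

A title that already uses most of the GPU’s fill rate at 720p would need more than twice that at 1080p, which is why truly next-generation titles target 720p.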

Another interesting point is that, despite the RSX lacking the Xbox 360’s "free 4X AA", in some cases it won’t matter. Titles that use longer pixel shader programs end up being bound by pixel shader performance rather than memory bandwidth, so the performance difference between no AA and 2X/4X AA may end up being quite small. Not all titles will push the RSX to its limits, however, and those titles will definitely see a performance drop with AA enabled. In the end, whether the RSX’s lack of embedded DRAM matters will depend entirely on the game engine being developed for the platform. Games that make more extensive use of long pixel shaders will see less of an impact with AA enabled than those that are more texture bound. Game developers are all over the map on this one, so it wouldn’t be fair to characterize all of the games as falling into one category or another.
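A simple bottleneck model captures this: the frame takes as long as its slowest stage. The Python sketch below treats a frame as either shader-limited or limited by framebuffer memory traffic, and assumes, as a deliberate simplification, that multisampled AA multiplies framebuffer traffic by the sample count while shading cost stays per pixel (real hardware compresses samples, so the scaling is usually gentler). The millisecond figures are hypothetical.

```python
def frame_time_ms(shader_ms: float, framebuffer_ms: float, aa_samples: int) -> float:
    """Bottleneck model: the slower of shading and framebuffer traffic sets
    the frame time. AA is assumed to scale framebuffer traffic linearly
    with the sample count (a simplification)."""
    return max(shader_ms, framebuffer_ms * aa_samples)


# Hypothetical frames, rendered with no AA, 2X AA and 4X AA:
long_shaders = [frame_time_ms(20.0, 3.0, s) for s in (1, 2, 4)]
bandwidth_heavy = [frame_time_ms(8.0, 6.0, s) for s in (1, 2, 4)]

print(long_shaders)     # [20.0, 20.0, 20.0]
print(bandwidth_heavy)  # [8.0, 12.0, 24.0]
```

The shader-bound frame absorbs 4X AA for free because the extra memory traffic hides behind shading time, while the bandwidth-heavy frame slows down with every added sample.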

ATI’s Xenos GPU is also looking pretty good, and most developers expect its performance to be very similar to the RSX’s, but real-world confirmation won’t be available for another couple of months. Developers have only recently received closer-to-final Xbox 360 hardware, and gauging the performance of the actual Xenos GPU against the R420-based solutions in the G5 development kits will take some time. Since the original dev kits offered significantly lower performance, developers will need a bit of time to figure out the Xenos GPU’s realistic limits.

Final Words

Just because these CPUs and GPUs are in a console doesn’t mean that we should throw away years of knowledge from the PC industry: performance doesn’t come out of thin air, and peak performance is almost never achieved. Clever marketing, however, will always try to fool the consumer.

And that’s what we have here today, with the Xbox 360 and PlayStation 3. Both consoles are marketed to be much more powerful than they actually are, and from talking to numerous game developers it seems that the real world performance of these platforms isn’t anywhere near what it was supposed to be.

It looks like significant advancements in game physics won’t happen on consoles for another 4 or 5 years, although they may come to PC games well before that.

It’s not all bad news however; the good news is that both GPUs are quite possibly the most promising part of the new consoles. With the performance that we have seen from NVIDIA’s G70, we have very high expectations for the 360 and PS3. The ability to finally run at HD resolutions in all games will bring a much needed element to console gaming.

And let’s not forget all of the other improvements to these next-generation game consoles. The CPUs, despite being relatively lackluster, will still be faster than their predecessors and increased system memory will give developers more breathing room. Then there are other improvements such as wireless controllers, better online play and updated game engines that will contribute to an overall better gaming experience.

In the end, performance could be better; the consoles aren’t what they could have been had the powers that be made different decisions. While they will bring better-quality games to market and will be better than their predecessors, it doesn’t look like they will be the end of PC gaming any more than the Xbox and PS2 were when they launched. The two markets will continue to coexist, with consoles being much easier to deal with and PCs offering some performance-derived advantages.

With much more powerful CPUs and, in the near future, more powerful GPUs, the PC paired with the right developers should be able to bring about that revolution in game physics and graphics we’ve been hoping for. Consoles will help accelerate the transition to multithreaded gaming, but it looks like it will take PC developers to bring about real change in things like game physics, AI and other non-visual elements of gaming.